CNA Explains: How to spot the telltale signs of a deepfake

How easy is it to make one? Can you avoid being deepfaked? An AI expert answers these questions and more.

One of these images is a deepfake. Can you tell which? (Answer: On the left.) (Screengrab: CNA video).

SINGAPORE: Amid growing global concern, Singapore has proposed a law to ban deepfakes of candidates during elections.

The rise of such content – digitally manipulated to look or sound like someone else – has made it increasingly difficult to determine whether something online is authentic.

Deepfakes have been used in scams involving political office holders – from United States president Joe Biden to Singapore’s past and present Prime Ministers – as well as celebrities like Taylor Swift. They’ve also been used to generate pornographic material, which makes up more than 90 per cent of deepfake videos online.

Ms Ong Si Ci, a lead engineer in artificial intelligence and machine learning at Singapore’s HTX, or Home Team Science and Technology Agency, breaks down for CNA the ways to spot a deepfake, how to avoid becoming a victim, and the effectiveness of banning such altered content.

How to identify deepfakes?

They typically have a few telltale signs, according to Ms Ong:

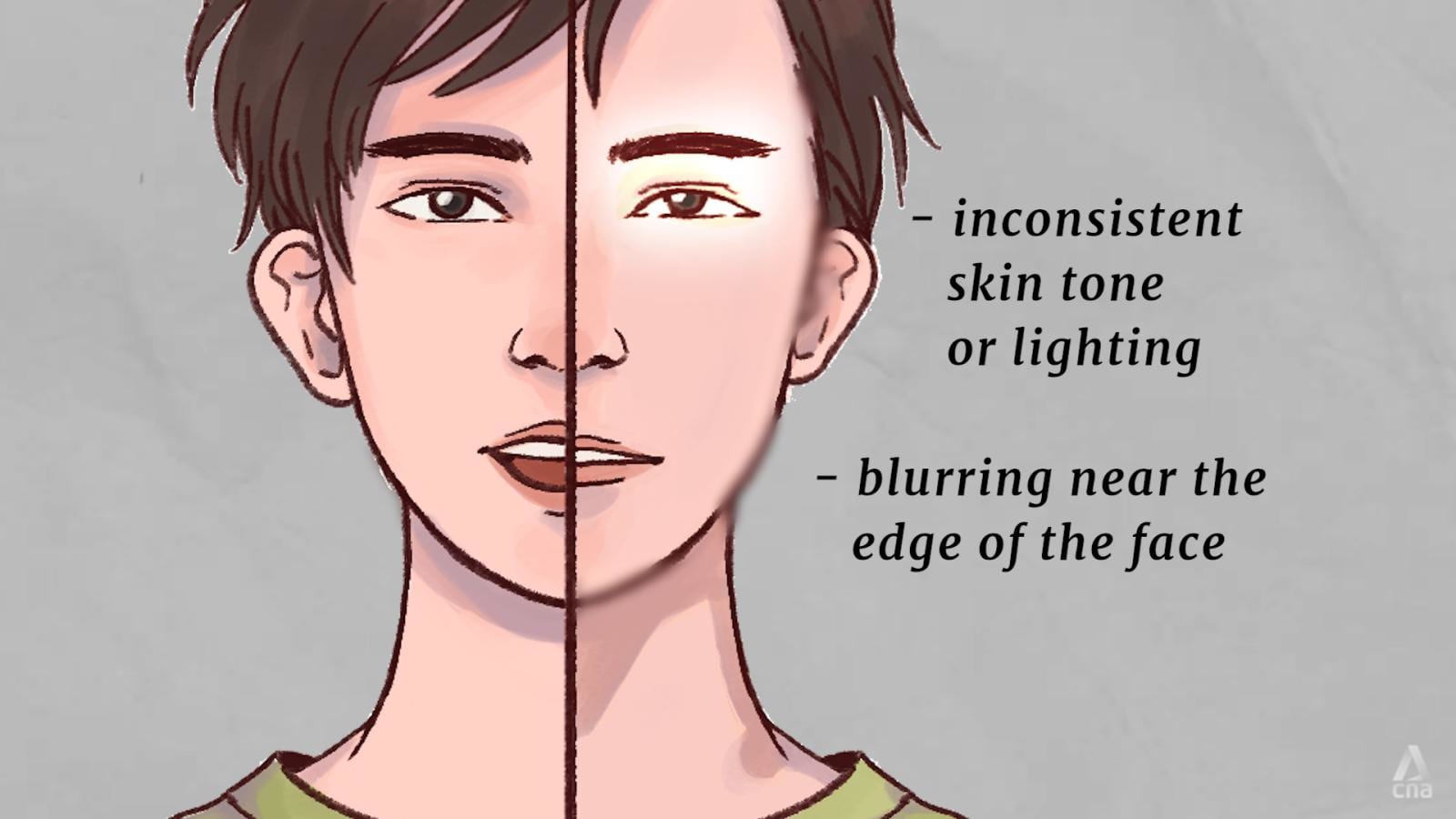

- Excessive blurring or inconsistencies around the edges of a face

- Inconsistent skin tone or lighting around the face, compared with other regions of the face or surrounding conditions

- Poor synchronisation between audio and video

- In speech, an unnatural, monotonous delivery

Yet these signs are also becoming increasingly hard to spot, as online tools get better and more powerful at generating very realistic deepfakes, said Ms Ong.

If unsure, the next best thing is to try to verify the content against other trusted media sources. Another option is to report the content to the online or social media platform in question.

How easy is it to make a deepfake?

Making a deepfake video of an individual once required thousands of images of the person. Now, one image is enough to make a deepfake face swap video.

For another type of deepfake – lip sync videos – all that’s needed is a short recording to train a model to generate audio that sounds just like the person, said Ms Ong.

She added that a 10-second deepfake clip could be put together in less than 30 seconds.

How to avoid becoming a victim?

It’s getting harder to do so in this day and age, with the amount of content being put up online, said Ms Ong. “Unless people don't post anything on social media (and don’t) share your content – your video or your image or your audio of yourself.”

Even “private” social media accounts aren’t all that private when they can be accessed through friends or contacts, she pointed out.

The danger of using commercial or proprietary apps – such as Facebook – is that uploaded data gets stored on their servers. In other words, an uploaded picture runs the risk of staying online, possibly forever, said Ms Ong.

“It's really important to read the fine print of the terms and conditions, on how they deal with the data as well as the data privacy policies,” she noted, adding that deepfake victims should also go to the police.

Can deepfakes just be banned?

The sheer amount of content out there makes it difficult for platforms to implement a blanket ban, said Ms Ong.

“This will require them to run through and basically check every single source that's been uploaded. And that will require a lot of computational resources, which can be really expensive.”

Singapore is proposing a temporary ban instead, one that would apply during elections. The country must hold its next election by November 2025.

The proposed law would only apply to online election advertising depicting people running as candidates.

It would be a criminal offence to publish, republish, share or repost content deemed prohibited. Authorities can ask individuals, social media platforms and internet service providers to take down or disable access to such content.

Social media firms that don’t comply could be fined up to S$1 million. All others face a fine of up to S$1,000, a jail term of up to 12 months, or both.

The ban, however, would not cover content in settings such as one-to-one online conversations or private group chats.

Ms Ong pointed out that deepfakes, just like AI or any technology for that matter, are also a double-edged sword: They can be deployed for malicious purposes but there are also “good use cases” in the business, entertainment and healthcare industries among others.

She pointed to the example of actor Val Kilmer, who lost his voice to throat cancer. To film his scenes in the 2022 Top Gun movie sequel, past recordings were used to create deepfake audio to “give him back his voice”.

What else is Singapore doing about deepfakes?

Beyond elections, the Infocomm Media Development Authority (IMDA) will introduce a new, legally binding code of practice requiring social media platforms to implement measures to deal with deepfakes.

Singapore also has a law against misinformation and fake news – the Protection from Online Falsehoods and Manipulation Act.

Regulations aside, HTX, a government statutory board, has developed a deepfake detector, albeit one unavailable to the public.

The software, called AlchemiX, explores what Ms Ong called a “physics-based” approach to assessing content. That’s in contrast to AI generators, which don’t understand the laws of physics.

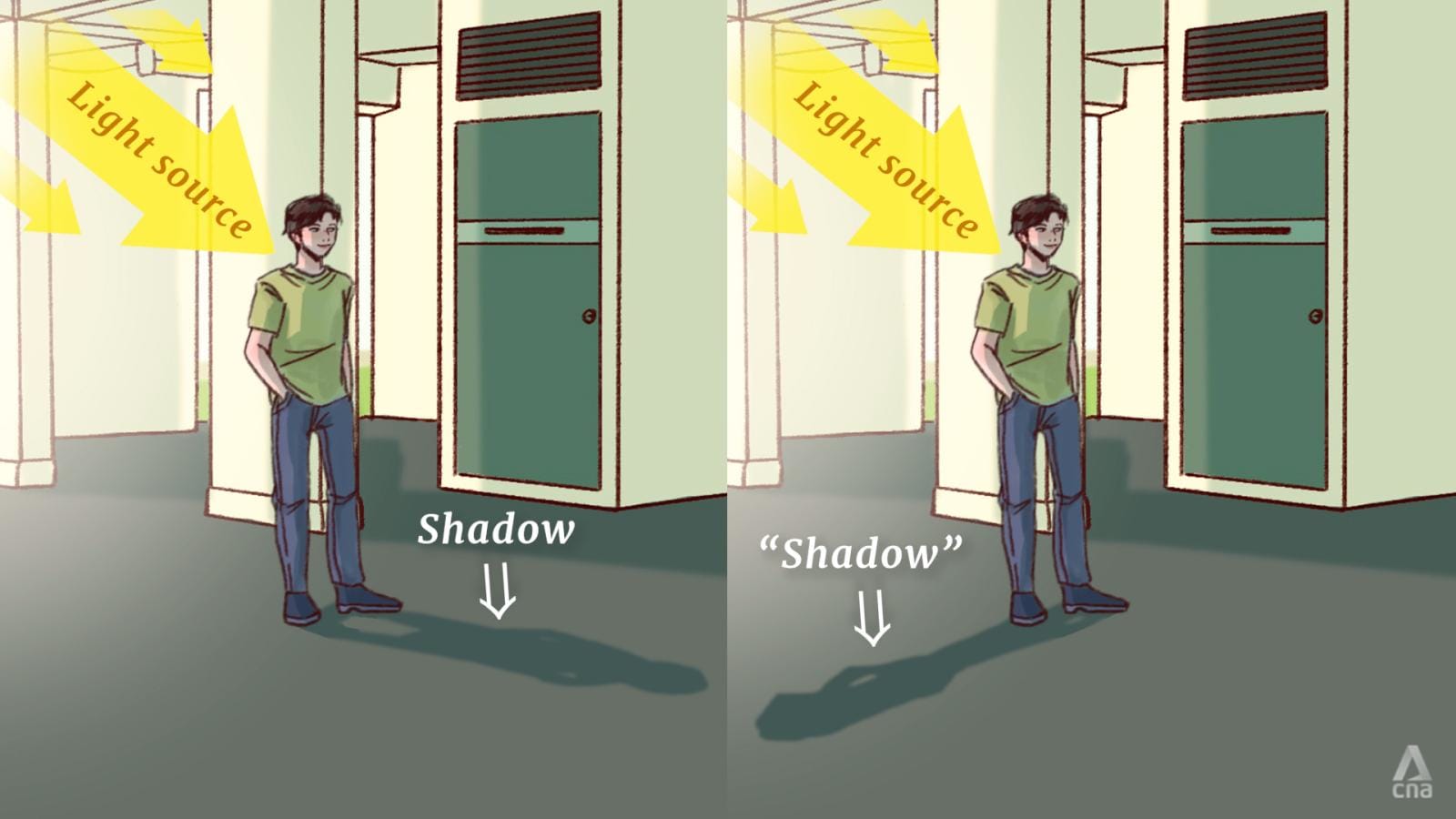

“For example, in certain images or inside of videos, if the light source is actually coming from in front of the person, it means that the shadow should be cast behind the person,” she explained.

“But in certain images the shadow is actually generated in front of the person, which is inconsistent with the light source.”
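The light-and-shadow check Ms Ong describes can be illustrated with a toy example. The sketch below is not how AlchemiX works, which the article does not detail. It assumes someone has already estimated three positions on the ground, seen from above: the light source, the person and the tip of the person's shadow. The function name `shadow_is_consistent` and its parameters are hypothetical, chosen only for illustration.

```python
from math import hypot


def shadow_is_consistent(light, subject, shadow_tip, min_cosine=0.0):
    """Return True if the shadow falls on the side of the subject away from the light.

    All three arguments are (x, y) positions on the ground plane, seen from above.
    These positions are assumed to be known; estimating them from a real image
    is a much harder problem that this sketch does not attempt.
    """
    # Direction the light travels: from the light source to the subject.
    lx, ly = subject[0] - light[0], subject[1] - light[1]
    # Direction the shadow extends: from the subject to the shadow's tip.
    sx, sy = shadow_tip[0] - subject[0], shadow_tip[1] - subject[1]

    light_len, shadow_len = hypot(lx, ly), hypot(sx, sy)
    if light_len == 0 or shadow_len == 0:
        raise ValueError("light, subject and shadow tip must be distinct points")

    # Cosine of the angle between the two directions: positive when the
    # shadow points away from the light, negative when it points towards it.
    cosine = (lx * sx + ly * sy) / (light_len * shadow_len)
    return cosine > min_cosine


# Light in front of the person, shadow cast behind: physically plausible.
print(shadow_is_consistent(light=(0, 5), subject=(0, 0), shadow_tip=(0, -2)))
# Light in front of the person, shadow also in front: inconsistent.
print(shadow_is_consistent(light=(0, 5), subject=(0, 0), shadow_tip=(0, 2)))
```

The first call matches the case where the shadow is cast behind the person, and the second matches the inconsistent case Ms Ong describes, where the shadow appears on the same side as the light.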