CNA Explains: Google has a new quantum computing chip – what's special about it?

How does the quantum computing chip Willow work, and are there any real-world applications?

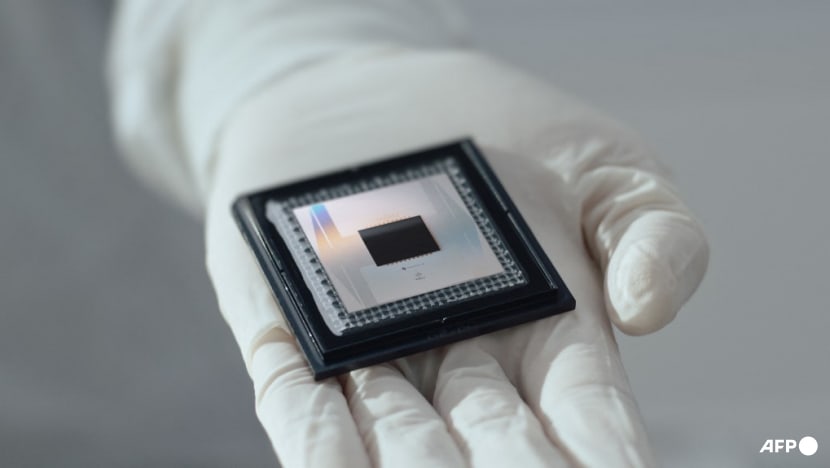

Google's new quantum computing chip Willow on Dec 9, 2024. (Photo: AFP/Google)

SINGAPORE: Google on Monday (Dec 9) announced a new chip called Willow, hailing it as a major advancement in the realm of quantum computing.

What is Willow?

In essence, it is a chip that can solve complex problems at unprecedented speeds.

As a measure of Willow's performance, Google used the random circuit sampling (RCS) benchmark. Google describes it as the hardest benchmark for classical computers that can be run on a quantum computer.

Its performance on this benchmark was "astonishing", said Google, adding that the new chip performed a computation in under five minutes.

This computation would have taken one of the fastest supercomputers in the world 10 septillion years.

“The rapidly growing gap shows that quantum processors are peeling away at a double exponential rate and will continue to vastly outperform classical computers as we scale up,” said Google.
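To put the two headline figures side by side, the gap can be worked out with simple arithmetic. The sketch below is illustrative only: it assumes "10 septillion" is the short-scale value of 10^25, treats "under five minutes" as exactly five minutes, and uses a hypothetical helper name, `speedup_factor`, that does not come from Google.

```python
# Rough arithmetic on the figures quoted in the article.
# Assumption: a year is taken as 365.25 days.
MINUTES_PER_YEAR = 365.25 * 24 * 60


def speedup_factor(classical_years: float, quantum_minutes: float) -> float:
    """Return how many times longer the classical run would take."""
    return classical_years * MINUTES_PER_YEAR / quantum_minutes


# 10 septillion (short scale) = 10 * 10^24 = 10^25 years.
classical_years = 10 * 10**24
# "Under five minutes" is rounded up to five, so the result is a lower bound.
quantum_minutes = 5

factor = speedup_factor(classical_years, quantum_minutes)
print(f"Classical run would take roughly {factor:.2e} times longer")
```

On these assumptions the ratio is on the order of 10^30, which is why the comparison is quoted in years rather than as a simple multiple.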

How does it work?

While classical computers rely on binary bits - zeros or ones - to store and process data, quantum computers use quantum bits, or qubits, which can represent combinations of zero and one at the same time and so encode far more information at once.
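One way to get a feel for the difference is to count states. A register of n classical bits holds exactly one of 2^n possible values at a time, while describing the state of n qubits in general takes 2^n numbers (amplitudes). The sketch below only counts those states. It is a simplification, not a simulation of a quantum computer, and the function name `num_basis_states` is a hypothetical one chosen for illustration. The value 105 is the qubit count reported for Willow.

```python
def num_basis_states(n_qubits: int) -> int:
    """Number of basis states (and amplitudes) needed to describe n qubits."""
    if n_qubits < 0:
        raise ValueError("n_qubits must be non-negative")
    return 2 ** n_qubits


# The count doubles with every added qubit.
for n in (1, 2, 10, 105):
    print(f"{n:>3} qubits -> {num_basis_states(n):,} basis states")
```

The doubling is also the reason conventional computers struggle to simulate such chips: every extra qubit doubles the amount of data a brute-force simulation has to track. Reading out the qubits still yields only n ordinary bits, so the larger state space does not translate directly into storage capacity.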

These qubits are the building blocks of quantum computers. Qubits are fast, but error-prone because they can be jostled by something as small as a subatomic particle from events in outer space.

As more qubits are packed into a chip, those errors can add up - making the chip no better than a normal computer chip. Willow has 105 qubits.

However, Google said the more qubits it used in Willow, the more it reduced errors.

"Each time, using our latest advances in quantum error correction, we were able to cut the error rate in half," said the tech giant.

"In other words, we achieved an exponential reduction in the error rate.

"This historic accomplishment is known in the field as 'below threshold' – being able to drive errors down while scaling up the number of qubits."
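Halving the error rate at each step is what makes the reduction exponential. The sketch below shows the arithmetic under stated assumptions: the starting error rate of 1 per cent is a hypothetical figure, not one reported by Google, and `error_after_steps` is an illustrative name. The factor of 2 comes from the "cut the error rate in half" statement.

```python
def error_after_steps(initial_error: float, steps: int,
                      suppression_factor: float = 2.0) -> float:
    """Error rate after a number of scale-ups, each dividing it by the factor."""
    if steps < 0:
        raise ValueError("steps must be non-negative")
    return initial_error / suppression_factor ** steps


# Hypothetical starting point, chosen only to make the trend visible.
initial_error = 0.01

for step in range(4):
    rate = error_after_steps(initial_error, step)
    print(f"after {step} scale-up(s): error rate = {rate:.5f}")
```

If the factor were below 1, the same formula would show errors growing with each scale-up, which is the "above threshold" situation that adding qubits normally produces.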

Is Willow a significant development?

Google said there were other scientific "firsts" in its test results. For example, it was one of the first compelling examples of real-time error correction on a superconducting quantum system.

This is crucial for any useful computation because if errors cannot be corrected fast enough, they ruin the computation.

"As the first system below threshold, this is the most convincing prototype for a scalable logical qubit built to date.

"It’s a strong sign that useful, very large quantum computers can indeed be built," said the company.

It added that the milestones achieved by Willow would pave the way for running practical and commercially relevant algorithms that cannot be replicated on conventional computers.

Founder and CEO of Horizon Quantum Computing Dr Joe Fitzsimons told CNA that the test results achieved what has been a major goal in the field for over 25 years.

In addition to demonstrating real-time error correction, the results strengthen Google's claim that its chips are hard to simulate with conventional computers.

"There have been several such claims since 2019, and there is a back and forth between improved conventional computing methods and improved quantum processors," Dr Fitzsimons added.

"Showing that the chips are hard to simulate is a necessary step on the path to showing that they can solve real-world problems more efficiently than conventional computers, but it is not the final step on that path."

Are there real-world applications?

Not at the moment, Google acknowledged.

It said it is "optimistic" that the Willow generation of chips can help move the field towards a first "useful, beyond-classical" computation that is relevant to a real-world application.

"On the one hand, we’ve run the RCS benchmark, which measures performance against classical computers but has no known real-world applications," said the company.

"On the other hand, we’ve done scientifically interesting simulations of quantum systems, which have led to new scientific discoveries but are still within the reach of classical computers."

The goal is to do both at the same time - to run algorithms that are beyond the reach of conventional computers and that are useful in the real world.

To the layman, this could take the form of discovering new medicines, designing more efficient batteries for electric cars, or accelerating progress in fusion and new energy alternatives, said Google.

"Many of these future game-changing applications won’t be feasible on classical computers; they’re waiting to be unlocked with quantum computing," the company added.

Theoretical physicist Sabine Hossenfelder wrote on X that "while the announcement is super impressive from a scientific point-of-view (POV) and all, the consequences for everyday life are zero".

"Estimates say that we will need about 1 million qubits for practically useful applications and we're still about 1 million qubits away from that," she added.

What are the challenges in quantum computing?

Mainstream adoption of quantum computing has been hindered by issues of scalability, hardware limitations, high costs and accessibility.

Another difficulty lies in the construction of quantum computer hardware.

Google's Willow chip is based on technology that requires intense cooling, which could be a limiting factor in scaling up.

“It may be fundamentally hard to build quantum computers ... as cooling so many qubits to the required temperature – close to absolute zero – would be hard or impossible,” Winfried Hensinger, professor of quantum technologies at the University of Sussex, told CNBC.

Dr Fitzsimons also noted that the error rate of quantum processors is much higher than that of conventional processors.

This makes quantum computation very unreliable for calculations that require more than a handful of operations, he said.

"The Google experiment has shown that it is now possible to suppress errors faster than they occur, preventing them from building up and swamping a calculation on a quantum computer," he added.

While a major milestone, the experiment only reduced the error rate by half, Dr Fitzsimons pointed out - and so more progress is needed in the quest for error-free computation.