Commentary: Could AI like ChatGPT replace human counsellors and therapists?

Companies and government agencies have rolled out AI chatbots to address employees’ mental health concerns, but such initiatives might miss the bigger picture, says Maria Hennessy of James Cook University, Singapore.

SINGAPORE: With burnout on the rise, improving access to mental health support is among the top priorities of employers. At the same time, new chatbots like ChatGPT are making headlines for their uncanny conversational capabilities, enabled by advances in artificial intelligence (AI) and natural language processing.

Can this latest wave of digital tech address declining mental health at the workplace?

It's hard to resist the lure of your own personal AI friend, especially if you grew up with Astro Boy or Optimus Prime. We already interact with AI chatbots like Siri on our iPhones, although admittedly Siri is not as fun as having a Transformer follow you around the house.

Today's AI chatbots learn and use language more efficiently and effectively than earlier versions. This lets them communicate with us in a way that seems more natural, and they can even appear to show empathy and understanding.

AI CHATBOTS FOR MENTAL HEALTH

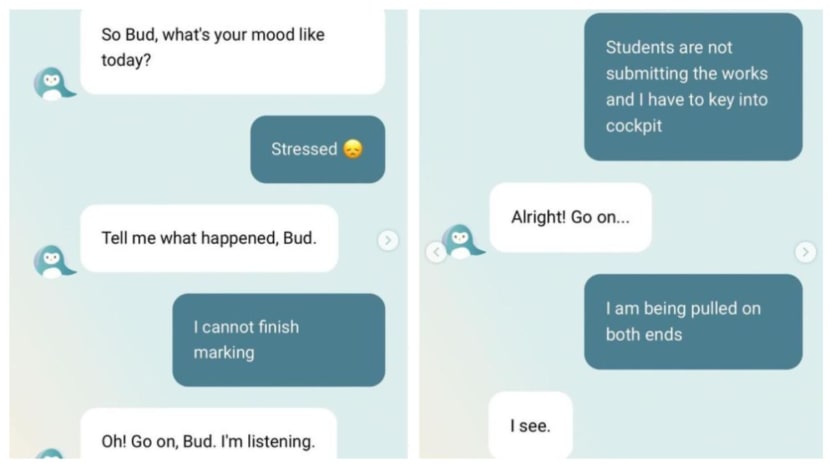

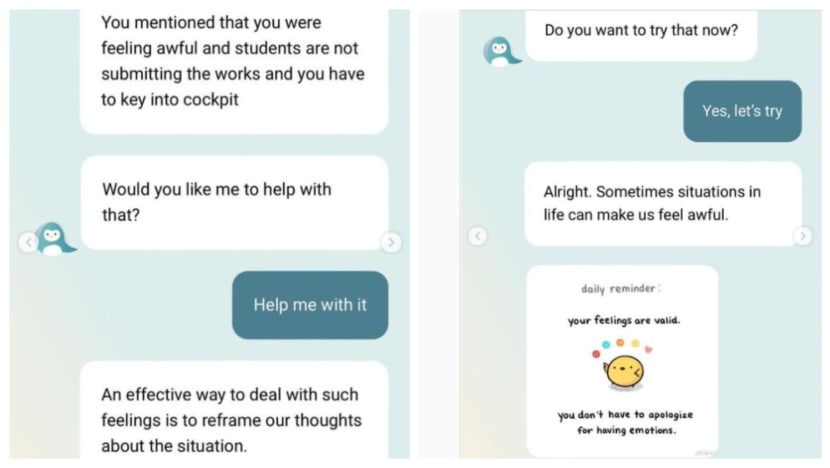

Companies and government agencies have embraced the potential of AI chatbots, rolling them out as a resource for employees. Take for example the use of Wysa by the Ministry of Education to reduce stress in the teaching workforce.

According to a survey published by the Singapore Counselling Centre in 2021, about 56 per cent of teachers said they were overwhelmed with their jobs. They cited long hours, with 80 per cent working more than 45 hours a week.

When faced with these issues, organisations tend to look to the individual worker to fix the problem, through workplace wellness programmes or initiatives. As seeing a psychologist or counsellor can be costly and involve long waiting times, AI chatbots are seen as a means of supporting mental health and well-being.

However, in the current state of play, AI mental health chatbots provide only the illusion of help, and there is a lack of evidence demonstrating their effectiveness and safety.

Their communication skills are fairly limited, as is their therapeutic advice. It’s hard to feel connected with a mental health chatbot that starts its conversation with the greeting “Hey Bud” as the MOE Wysa chatbot does.

Users have noted that the chatbot’s responses are basic counselling phrases and sometimes miss the point of the conversation entirely.

At the moment, AI chatbots are little better than Google for information. They certainly can’t provide the level of connection and understanding that we need when we reach out with a mental health concern.

A special education teacher who has tried Wysa, and who wishes to remain anonymous, shared in conversation: “While I can see that it could have benefits in being able to access it anytime, it does not seem like a valid response to teacher stress.

“Teachers are relational by nature, and being quite an autonomous vocation, in that you are often the only adult in the room with kids, being able to vent or talk through daily stresses with another adult is worth its weight in gold.”

WICKED PROBLEMS NEED SYSTEMS-LEVEL INTERVENTIONS

Mental health in the workplace is a wicked problem, one that is complex with no clear solution. It helps to think about organisational well-being as layers of a system: The individual level (Me), the group level (We) and the organisational level (Us).

Any mental health and well-being initiative in a workplace needs to target all layers of this interactive and dynamic system, or its effects will not be sustained.

This is particularly important in education, as teachers’ mental health should not be thought of as something that the individual is solely responsible for. It should be a shared concern for our communities and schools (We), and our local and global education systems (Us).

At the “We” level, parents in Singapore may benefit from increased access to positive parenting programmes such as Triple P, and to parent-teacher sessions that help them understand the boundaries of what to expect from schools.

Peer supervision is commonly practised in other professions, and it could be introduced for Singapore teachers to support their professional competence and mental health.

At the “Us” level, academic achievement is an important goal of the Singapore education system, though schools are moving towards supporting students' well-being and holistic development.

But how can we expect adults to understand work-life balance when they may not experience this as children? Do we need so much homework, co-curricular activities and time spent at learning centres after school? Would the mental health of both students and teachers benefit from time to relax, explore and enjoy?

These are broader societal questions that do not have easy answers, but we need an open and curious space to discuss them.

If a workplace targets only the individual, such as by providing an AI chatbot for staff, it misses the bigger picture of how education systems affect the mental health of the teaching workforce, along with student well-being. Teachers live and work within complex, demanding environments, so interventions must leverage the system to support sustained change.

CAVEAT EMPTOR

The intentions here are good ones. Digital technologies do provide timely access to mental health information at a reduced cost, and make service provision more efficient.

However, the AI mental health industry is unregulated and has no guidelines to keep consumers safe. Would you buy a car that didn’t meet industry standards? Or food that hadn’t been processed properly and may cause illness? As the space is filled with health tech entrepreneurs who do not have to comply with regulations yet, buyers should beware.

Initiatives to support teacher mental health and well-being work best as part of a system-wide solution to the problem. From this perspective, AI chatbots are doomed to fail anyway, regardless of their current limitations.

They can’t be funny, creative, understanding or caring - and that’s what we need when we reach out for help. And the jury is still out on their effectiveness in improving individual mental health.

Dr Maria Hennessy is an Associate Professor in Clinical Psychology at James Cook University, Singapore.